Exploratory Testing: The benefits of applying this process to my team

The positive outcomes that this practice can bring to your process

Some time ago, I was studying how to improve the quality assurance of our software delivery in a way that let the whole team (and invited people from other domains) join the testing. That is how I found Exploratory Testing, which I presented to my colleagues and which we then applied to our process. Since then, I have recorded all the data I could get from each session, and that data convinced me that this addition to our process was a great one. This article is about how we adapted it to our team and the outcomes and learnings we got from it.

Automation is not sufficient!

Scripted regression tests are automated: the Continuous Integration (CI) system executes them with every build. This gives the fast feedback that Agile teams need to deliver frequently at a sustainable pace. These tests are really important for preventing bugs, because they focus on executing the software and checking its expected results. But they are non-thinking activities, and on their own they are not sufficient to ensure that the system does everything we expect and nothing we don’t.

Agile teams need a manual testing approach that is:

- Adaptable (software changes very quickly);

- Productive (it generates large amounts of information quickly, giving fast feedback).

Exploratory Testing is a perfect fit: We move through the software rapidly, poking and prodding to reveal unintended consequences of design decisions and risks we didn’t consider in advance. Don’t worry, we are going to talk a bit more about it :)

The perfect fit: Exploratory Testing

Some people understand it as “Do random stuff and see what happens”. But no, this is not the idea of Exploratory Testing.

In fact, it is a rigorous investigative practice, in which we use the same kinds of test design analysis techniques and heuristics that we do in traditional test design, but execute the tests immediately. Also, it is a testing approach that emphasizes the freedom and responsibility of the individual tester.

Test design and execution become inseparable, a single activity, and everything connects with learning. It’s learning together to build better products. It’s creating an environment that enables a continuous “testing is learning” loop, in which the outputs of one session become the inputs of the next.

And why is it valuable?

- Share domain knowledge between all the testers;

- Feedback to the developers as soon as possible;

- Different perspectives (Devs, QA, designers, PO/PM, other teams, etc…);

- Evaluate the usability and UX of a product;

- Log potential new test ideas and product ideas;

- Change and adapt our tests based on the results;

- Results give value to the project by providing instant and reliable information about the test item we are working with.

Our adapted Exploratory Testing sessions

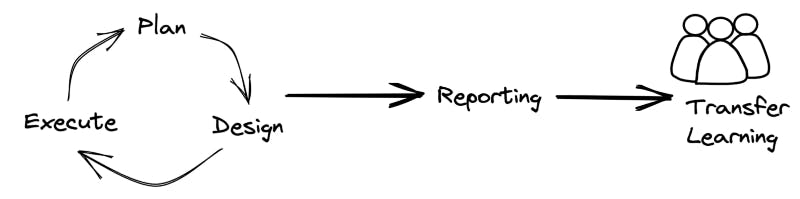

After studying a bit about how each type of exploratory session works, I proposed to our team a customized version of Session-Based Exploratory Testing. This approach gives us some direction so we can focus on the work at hand, prioritizing it by risk, by degree of importance to the project, or by the functionality of the test item, among others.

Advantages of the Session-Based Exploratory Testing

In our experience, it keeps all the participants up to date and familiar with what we are doing, as we invite them to explore the proposed areas. Each session improves the coverage of future sessions, because it uses the knowledge acquired from past ones. We finish with several new scenarios, possible issues and bugs, hints of new things to test in upcoming sessions, new product ideas, and lots of gained knowledge!

How we implemented it in our team

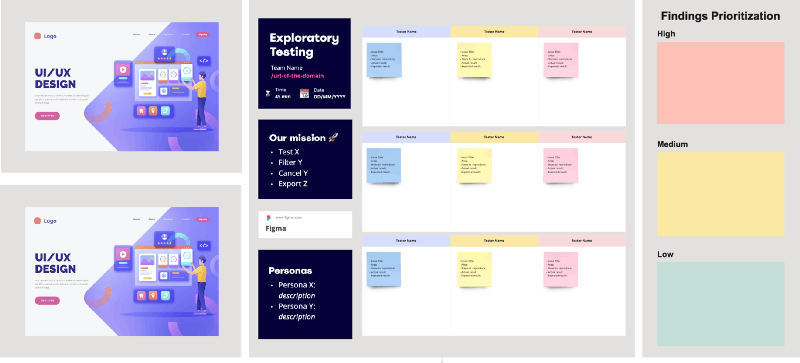

Basically, for each session that I schedule with the team, we define a Mission, which states why we are there: what we are expected to test. The areas of testing are also defined and, when needed, the personas/strategies each tester will follow.

The timebox for each moment is also defined and explained at the very beginning of the session: the minutes we are going to spend executing our designed tests, and the amount of time we are going to dedicate to the briefing moment. For the testing moment, I always recommend that everybody dedicate around 80% of the time to the charter (the mission) and 20% to opportunity, which is off-charter work: exploring or retesting other areas that could be risky. Usually, our sessions take around 45 to 60 minutes (and that is considered a small session!). And we use a shared Spotify team playlist during the testing execution :D
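To make the timebox arithmetic concrete, here is a minimal Python sketch of a session plan. The `SessionPlan` class, its field names, the example mission, and the 40/15-minute split between testing and briefing are all assumptions made up for illustration; only the 80/20 charter/opportunity ratio and the 45 to 60 minute total come from our sessions.

```python
from dataclasses import dataclass, field


@dataclass
class SessionPlan:
    """Hypothetical container for one exploratory testing session."""
    mission: str              # the charter: what we are expected to test
    areas: list[str]          # areas of the product to explore
    testing_minutes: int      # timebox for hands-on testing
    briefing_minutes: int     # timebox for the briefing discussion
    charter_share: float = 0.8  # share of testing time spent on the charter
    personas: dict[str, str] = field(default_factory=dict)  # tester -> persona

    def charter_minutes(self) -> int:
        # Time focused on the mission itself (around 80% by default).
        return round(self.testing_minutes * self.charter_share)

    def opportunity_minutes(self) -> int:
        # Remaining testing time for off-charter, potentially risky areas.
        return self.testing_minutes - self.charter_minutes()

    def total_minutes(self) -> int:
        return self.testing_minutes + self.briefing_minutes


# Example values are invented for illustration.
plan = SessionPlan(
    mission="Explore the new checkout flow before the release",
    areas=["cart", "payment", "order confirmation"],
    testing_minutes=40,
    briefing_minutes=15,
)

print(plan.charter_minutes(), plan.opportunity_minutes(), plan.total_minutes())
```

With these example numbers, a 40-minute testing block splits into 32 minutes on the charter and 8 minutes of opportunity, and the whole session takes 55 minutes.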

During the testing, all the participants create tickets on our board with the things they found: bugs, issues, proposals, ideas, etc. They don’t need to decide right away whether they really found a bug; they just need to flag their findings. Later, after the testing and during the briefing time, all the testers are invited to explain what they found or anything else they want to bring to the discussion. This is a very valuable moment, in which the product team can catch some urgent actions, nice-to-have increments, possible new features, and also some tickets for our backlog. It is also a good moment to pass information and product knowledge on to those who tested the software: continuous learning.

An example of the session framework we created! :)

The Outcomes

First of all, I must say that it may look a bit hard to apply, but it is not. It took me some time to organize the methodology, but it is incredibly easy to pick up and to learn from each session. It helped our team understand the product better and built an investigative mindset in each of our members, things we felt in the following sprints. We started to run the sessions not only for our team, but also for the tribe and other domains: for example, the Design System team, or other teams inside the tribe, some of whose members had never seen what we were doing until then.

In a matter of months, our team was so well onboarded with the framework that they started to improve the process: proposing better charters, exploring more, building a testing mentality, and finding their own way to design their tests. The sessions became independent, guided not only by me but by the entire team.

I want numbers!

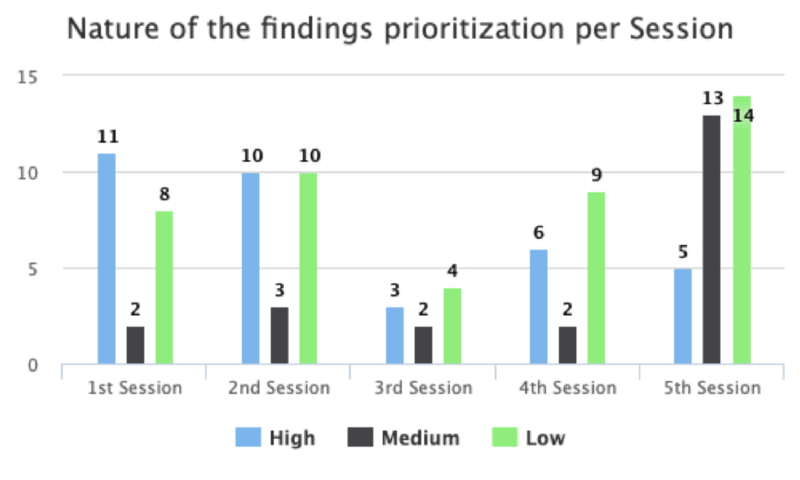

It is common to have a board with many (and sometimes repeated) reports, but usually just a small percentage are considered show-stoppers. After the session, the team evaluates the reports to understand their nature (bug, issue, possible new feature, etc.) and organizes them in the Findings Prioritization column, so we can plan how and when to tackle them.
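The triage described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the `Finding` class, the kind and priority labels, the title-based duplicate check, and the sample reports are all hypothetical, and in practice the evaluation is a team discussion rather than a script.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Finding:
    """Hypothetical shape of one report created during a session."""
    title: str
    kind: str      # e.g. "bug", "issue", "idea" (labels are assumptions)
    priority: str  # e.g. "show-stopper", "low" (labels are assumptions)


def deduplicate(findings):
    """Keep the first report per title, ignoring case and outer spaces."""
    seen = set()
    unique = []
    for finding in findings:
        key = finding.title.strip().lower()
        if key not in seen:
            seen.add(key)
            unique.append(finding)
    return unique


def count_by_priority(findings):
    """Count how many findings fall under each priority label."""
    return Counter(finding.priority for finding in findings)


# Sample reports invented for illustration, including one repeated report.
findings = [
    Finding("Pay button disabled after retry", "bug", "show-stopper"),
    Finding("pay button disabled after retry ", "bug", "show-stopper"),
    Finding("Confirmation email has a typo", "issue", "low"),
    Finding("Allow saving the cart for later", "idea", "low"),
]

unique = deduplicate(findings)
summary = count_by_priority(unique)
print(len(unique), dict(summary))
```

Matching on the normalized title is a deliberately naive way to spot repeated reports; two testers rarely word the same problem identically, so a human pass over the board is still needed.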

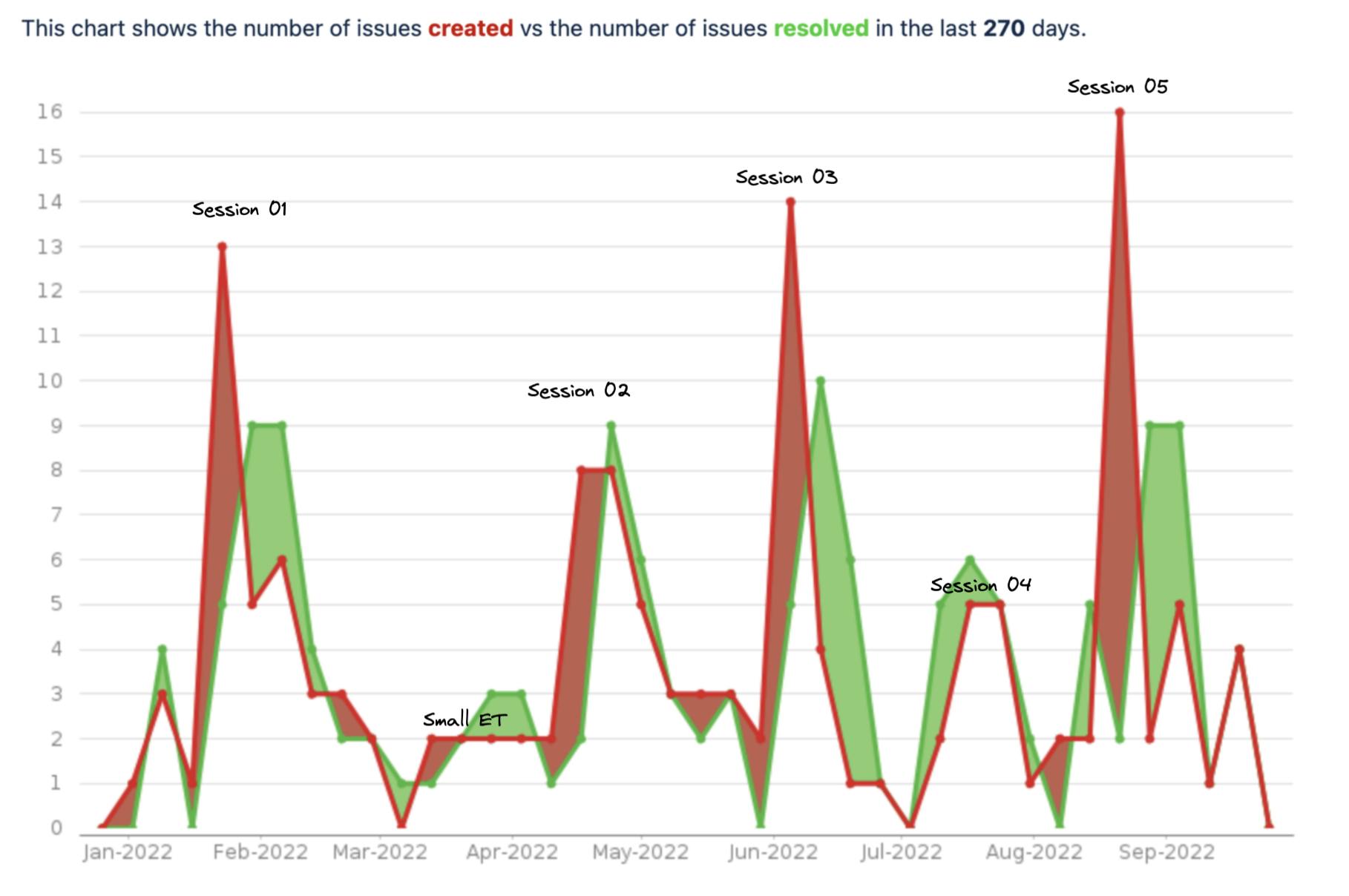

I plotted this chart based on our last 5 sessions. It shows the number of findings that we decided to tackle as a team, separated by priority, in order to deliver new features and parts of our software. As said, those are only the ones selected to be tackled; there were lots of other findings that were questions, new product ideas, doubts regarding the new functionality, and so on.

This chart reveals exactly how each session is flagged in the "Bugs Report" for our team. We run Exploratory Testing before each release, with an average of 12 testers (from the team or invited members), to ensure a better quality of the release. Nowadays, this practice is a must in our team, and even when our team works on other initiatives, we negotiate it as a practice that we want applied in the process, sharing this knowledge with the whole company.

Why does the full team work on that? We are the team players and owners of a product, from top to bottom, responsible for the quality of the delivery, and it is a team job to ensure that the product is ready to be launched. It is part of the process. And sometimes the process does not work as expected, so we work to improve it. There is no easy and direct way to make things work in Agile development. Sometimes it is a big failure and the process is a big mess, so we keep improving, learning, and adapting it.

I call it “building a quality assurance mindset”.

References I used to learn :)

- https://less.works/papers/et.pdf

- https://medium.com/@martial.testing/session-based-exploratory-testing-a-first-approach-ad88fce9056e

- https://gareth85.medium.com/a-win-win-win-situation-on-exploratory-testing-19b0018ee285

- https://medium.com/ingeniouslysimple/how-to-run-an-exploratory-ux-testing-session-8e9d290c175

- https://www.softwaretestinghelp.com/what-is-exploratory-testing/

- https://medium.com/quintoandar-tech-blog/https-medium-com-manoelamendonca-a-guide-to-starting-bug-bashing-now-3c5da094773